Before You Repost: Using AI and Awareness to Break the Bias Loop

Viral posts shape more than opinions. They shape our reality. This post explores how AI tools can help us examine sentiment, bias, and funding before we hit share, and how resharing impacts both our algorithms and our worldview.

I’ve been deeply disheartened by the politics and polarization in this country — not just because of the headlines themselves and what they represent, but because of how quickly we rush to share them. We often hit “reshare” before we ask:

Is this true?

Who benefits from this narrative?

Is this feeding my confirmation bias?

Before we repost, we should slow down just enough to ask better questions. AI can help us do that.

The Power of a Post

This TikTok really hit me in the gut: TikTok by @obriengoespotatoes. It’s raw, emotional, and speaks to a very real fear many of us feel. I’ve been thinking about it a lot lately. It has made me want to reflect on my own feelings about safety, community, and change.

Emotional content sticks. It bypasses our rational brain and appeals straight to our identity. Even though I empathize with the underlying feeling in the video, I also recognize it has a bias: in tone, in framing, and in what it leaves out. That doesn’t make it invalid. It makes it powerful and potentially dangerous if consumed without critical thought.

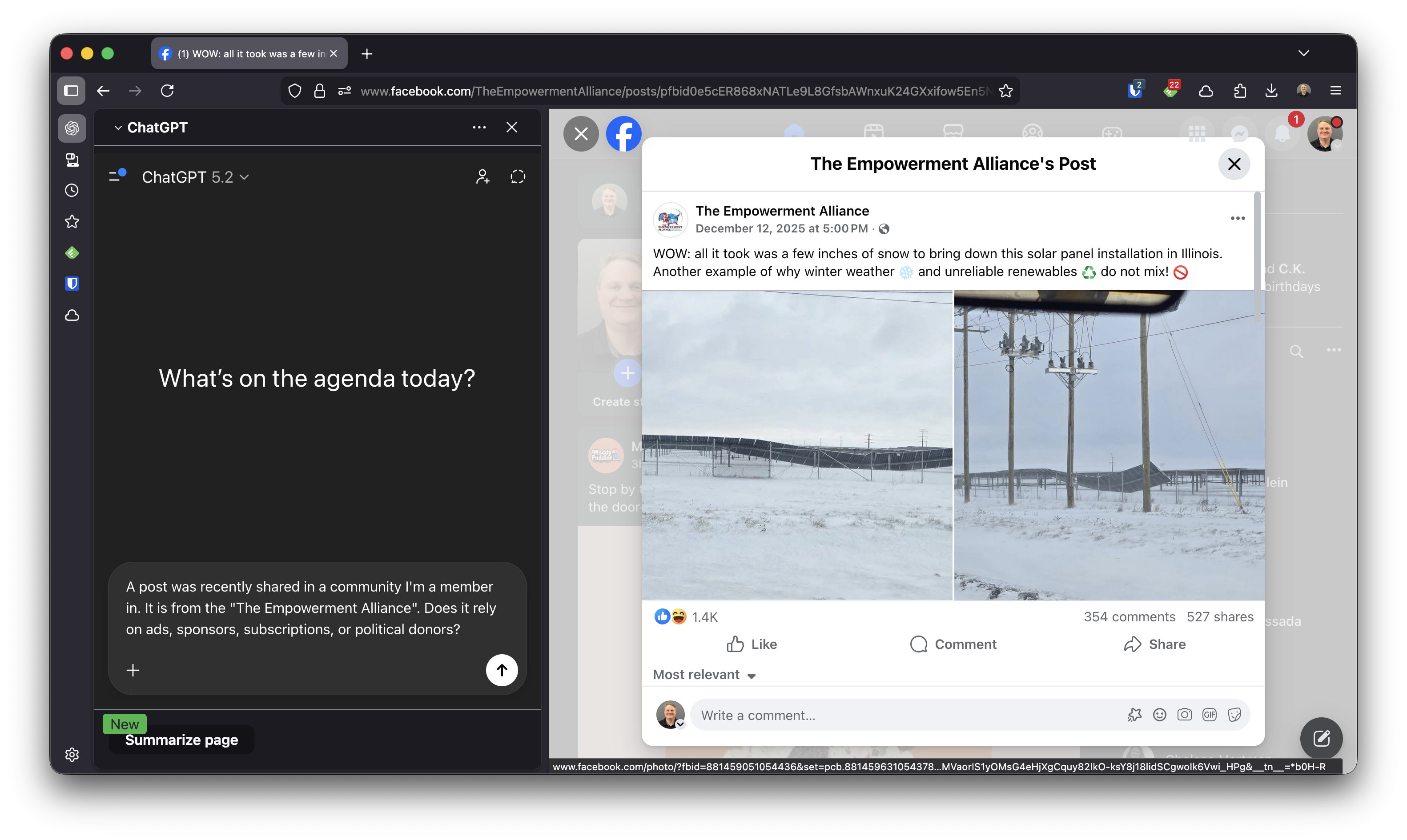

The same goes for this Facebook post, which has been reshared by some people I know. It reinforces a perspective that’s already popular with my friends, but how much of it is fact, and how much of it is narrative?

Engagement Amplifies

It’s not just resharing that boosts a post’s reach. Commenting, reacting, quote-posting, or even clicking all feed the algorithm data. Every interaction, whether you agree, disagree, or are simply outraged, teaches the platform that this content is engaging.

That means:

- Commenting “This is wrong!” still counts as a signal to promote the post.

- Clicking to “read the comments” helps the post get pushed further.

- Arguing under a post you hate still boosts its visibility to people who might not disagree with it.

Social media algorithms don’t care what you think. They only need to know that you’re thinking about a post and staying engaged. The outrage economy thrives on this.
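To make that concrete, here is a toy sketch in Python. The interaction types come from the paragraph above, but the weights and the `engagement_score` function are my own invention for illustration. Real platforms do not publish their ranking formulas, so treat this as a thought experiment and not a description of any actual system.

```python
# Toy model only: these weights are hypothetical, not any real platform's formula.
INTERACTION_WEIGHTS = {
    "click": 1,
    "reaction": 2,
    "comment": 4,
    "quote_post": 5,
    "reshare": 6,
}


def engagement_score(interactions):
    """Add up the weight of each interaction.

    Each interaction is a (kind, stance) pair. The stance is deliberately
    ignored, which is the whole point of the sketch.
    """
    return sum(INTERACTION_WEIGHTS[kind] for kind, _stance in interactions)


supportive = [("comment", "agree"), ("reshare", "agree")]
outraged = [("comment", "disagree"), ("reshare", "disagree")]

print(engagement_score(supportive))
print(engagement_score(outraged))
```

Both calls print the same number. In this simplified model, an angry comment and a supportive one are indistinguishable signals.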

Sometimes, not engaging at all is the wiser path, not out of avoidance, but as an act of intentional non-amplification. I’ve personally learned this the hard way. I was defriended by a family member because of how I was baiting them on some of the content they reshared. I disagreed with the post. I was outraged, and instead of stepping away, I leaned into that outrage. I wanted a reaction. And I got one.

That moment forced me to confront something uncomfortable: engaging from anger doesn’t just feed the algorithm, it damages relationships. It didn’t change his mind. It didn’t make the situation better. It only added more heat, and more visibility, to something that was already divisive.

If you’re truly trying to reduce the spread of misinformation or inflammatory content, the most powerful move might be no engagement at all. Or better yet, engaging with something constructive instead. Starve the drama. Feed the dialogue.

What You Share Shapes What You See

Algorithms are reflection machines. When you interact with content that aligns with your worldview, platforms learn: Ah, more of that please. And then, that’s what you see. Again and again.

This is how confirmation bias becomes an echo chamber. Over time, it’s not that people don’t want to “do their own research”; it’s that they don’t even see the other side anymore. The algorithm has trained them not to.

I’ve seen this happen firsthand in my own social feeds. The longer I interact with something on TikTok, the more often similar content shows up. The more I engage with a post that aligns with my beliefs, the more my feed fills with that perspective.
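Here is a small simulation of that loop, again as a hedged sketch. Everything in it is an assumption made for illustration: two made-up viewpoint labels ("aligned" and "opposing"), a feed of ten posts, a user who engages only with aligned posts, and a recommender that shows each viewpoint in proportion to past engagement. No real platform is this simple.

```python
def simulate_feed(rounds, feed_size=10):
    """Return how many 'aligned' posts appear in the feed each round.

    Hypothetical recommender: each viewpoint is shown in proportion to its
    accumulated weight, and every engagement adds one unit of weight.
    """
    weights = {"aligned": 1.0, "opposing": 1.0}  # start with no preference
    history = []
    for _ in range(rounds):
        total = weights["aligned"] + weights["opposing"]
        aligned_shown = round(feed_size * weights["aligned"] / total)
        history.append(aligned_shown)
        # The user engages with every aligned post and ignores the rest.
        weights["aligned"] += aligned_shown
    return history


print(simulate_feed(5))
```

The feed starts evenly split and, within a few rounds, shows only aligned posts. The opposing view never gets argued against; it simply stops appearing.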

Start With AI Before You Repost

I’ve started thinking of AI as a pause button. It is something that creates just enough space between reaction and response. Here’s an easy process to check yourself before you hit share:

1. Do a Sentiment Analysis

I’ve found that noticing the emotional tone of a post is often the fastest way to see how it’s trying to influence me. Before deciding what to believe or how to respond, identify the emotional signals at play.

Use a tool like ChatGPT (or any AI with natural language understanding) to ask:

- What is the emotional tone of this post?

- What emotional response is it trying to evoke?

Paste the post into ChatGPT and prompt it with:

“Can you do a sentiment analysis of this text?”

“What emotional language is used, and what feelings might it provoke?”

2. Ask About Bias (Including Your Own)

Bias isn’t always obvious and it’s not always bad. Recognizing bias helps you see the assumptions shaping the message (and your reaction to it). Before accepting or rejecting a post, ask: Whose perspective is being centered and whose is missing?

Use a tool like ChatGPT to ask:

- Does this post show signs of political, cultural, or ideological bias?

- What assumptions does the author make?

- What perspectives are not represented?

Paste the post into ChatGPT and prompt it with:

“Can you identify any biases in this text?”

“What worldview or position is this post coming from?”

“Who might disagree with this, and why?”

3. Follow the Money

Understanding who funds a message, or who stands to gain from it, can reveal motivations that aren’t obvious at first glance. Ask yourself: Is this post truly grassroots, or is there a financial or political engine behind it?

Use a tool like ChatGPT to ask:

- Who owns or funds the outlet or creator behind this post?

- Are there financial, political, or ideological incentives influencing the message?

- Is the content sponsored, monetized, or aligned with a larger agenda?

Paste the post or its source into ChatGPT and prompt it with:

“Who funds or owns this media outlet or creator?”

“Does this platform have known political or commercial affiliations?”

“Is there any conflict of interest that could shape this message?”

4. Ask Who It Helps or Harms

Every post, even if unintentionally, serves a purpose. It supports a narrative, shapes perception, or influences behavior. Ask yourself: Who benefits if I believe this? Who could be hurt by it being shared or taken out of context?

Use a tool like ChatGPT to ask:

- Who is portrayed positively or negatively in this post?

- What action or belief is the post encouraging?

- Could this harm individuals or groups — especially if the information is misleading or incomplete?

Paste the post into ChatGPT and prompt it with:

“Who is this post positioning as the ‘hero’ or ‘villain’?”

“What behavior or emotion is this message trying to drive?”

“Could this content reinforce harmful stereotypes or misinformation?”

Putting This Into Practice

The screenshot above, showing damaged solar panels, came from a recent repost in a Facebook group I’m part of. My first instinct was to jump into the comments.

Instead, this is where theory becomes habit. I spent just a few minutes asking a couple of questions from step 3, Follow the Money:

- Who funds or owns this media outlet?

- Is there any conflict of interest?

I don’t do this every time. We have a motto in our house: Practice over Perfection. The more often I ask these questions, the more natural that pause becomes.

What follows is a simple framework for evaluating content before reacting or resharing.

You can write this out by hand, journal-style. Or you can paste the post into ChatGPT and work through each section there.

If you do this often, consider creating a simple custom GPT or saving a reusable prompt template.
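One way to save a reusable prompt template is a few lines of Python that bundle the questions from the four steps above into a single message you can paste into ChatGPT or another assistant. This is only a sketch: the `PROMPTS` dictionary, the step names, and the `build_prompt` function are names I made up for the example, and it calls no AI service itself.

```python
# Questions copied from the four steps earlier in this post.
PROMPTS = {
    "sentiment": [
        "Can you do a sentiment analysis of this text?",
        "What emotional language is used, and what feelings might it provoke?",
    ],
    "bias": [
        "Can you identify any biases in this text?",
        "What worldview or position is this post coming from?",
        "Who might disagree with this, and why?",
    ],
    "funding": [
        "Who funds or owns this media outlet or creator?",
        "Does this platform have known political or commercial affiliations?",
        "Is there any conflict of interest that could shape this message?",
    ],
    "impact": [
        "Who is this post positioning as the 'hero' or 'villain'?",
        "What behavior or emotion is this message trying to drive?",
        "Could this content reinforce harmful stereotypes or misinformation?",
    ],
}


def build_prompt(post_text, steps=("sentiment", "bias", "funding", "impact")):
    """Combine the questions for the chosen steps with the post text."""
    questions = [question for step in steps for question in PROMPTS[step]]
    numbered = "\n".join(
        f"{number}. {question}" for number, question in enumerate(questions, start=1)
    )
    return (
        "Please answer each question about the post quoted below.\n\n"
        f"{numbered}\n\n"
        f"Post:\n{post_text}"
    )


# Example: only the "Follow the Money" questions.
print(build_prompt("Paste the post text here.", steps=("funding",)))
```

Passing a shorter `steps` tuple keeps the habit quick on days when you only have time for one or two questions.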

Post Analysis Template

Platform:

Where did you see it? (e.g., Facebook, TikTok, X, Instagram)

Outlet or Creator:

Who posted or published this content?

Emotional Sentiment:

What emotional tone is being used? Is it angry, fearful, hopeful, mocking, etc.?

Observed Bias (if any):

Is there a clear political, cultural, or ideological slant? What perspectives are not being represented?

Funding or Incentives Behind the Source:

Is this person or outlet supported by ad clicks, political donors, or a specific community? Who benefits from this message?

What This Encourages the Viewer to Feel or Do:

Is the post trying to stir outrage? Urge action? Reinforce a belief?

What Context Might Be Missing:

Does the post simplify complex issues? Leave out important facts? Has the story been fact-checked elsewhere?
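If you prefer to keep these notes in a script or notebook instead of a journal, the template can be sketched as a small Python record. The `PostAnalysis` class and its field names are hypothetical; they simply mirror the headings above, and the `unanswered` helper lists the sections you have not filled in yet.

```python
from dataclasses import dataclass, fields


@dataclass
class PostAnalysis:
    """One journal entry; each field mirrors a heading in the template."""

    platform: str = ""
    outlet_or_creator: str = ""
    emotional_sentiment: str = ""
    observed_bias: str = ""
    funding_or_incentives: str = ""
    encourages: str = ""
    missing_context: str = ""

    def unanswered(self):
        """Return the names of sections that are still blank."""
        return [f.name for f in fields(self) if not getattr(self, f.name).strip()]


entry = PostAnalysis(platform="Facebook", emotional_sentiment="angry")
print(entry.unanswered())
```

A blank section is not a failure. It is a reminder of what you do not yet know about the post.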

Browser Extensions That Can Help You Do This

While no tool does it all, here are a few browser extensions that can support this kind of thinking:

Chrome Extensions

Firefox Add-Ons

Tip: I have not personally vetted any of these extensions. Always read reviews before installing any tool, especially those claiming to “detect fake news.” No tool is perfect, and some may have their own biases or limitations.

We Need Better Reflexes, Not Just Better Opinions

The problem isn’t that people care too much. It’s that we often don’t stop long enough to care wisely. Sharing emotional content without context is like handing out torches in a dry forest. It feels like action. But it can cause real harm.

I want us to be better than that. I want us to use the tools we now have (AI, transparency tools, even just questions) to check ourselves and each other.

Not because we want to be “neutral.” But because we want to be honest. So the next time something hits your feed and your first reaction is “YES, THIS!!” — pause.

- Breathe.

- Paste it into a tool like ChatGPT.

- Ask some questions.

- Sit with the answers.

Then decide if you want to be part of amplifying it.

And if something hits your feed and your first reaction is “Absolutely not!” or “This is infuriating,” pause there too. That knee-jerk urge to quote-tweet it with outrage, to drop a sarcastic comment, or to drag it into your stories? That’s still engagement. Still amplification. Still fuel for the feed. Ask yourself:

- Is this worth engaging with?

- Will it change minds, or just deepen divisions?

- Am I reacting to disinformation, and if so, do I really want to help spread it, even in protest?

We can’t control everything about this country or this moment, but we can control how we show up for the conversation — and what we choose to elevate.

The algorithm isn’t neutral. But our awareness can be powerful.

A Final Reminder: Be Human First

While AI can help us analyze content, it can’t replace our empathy.

Before you repost, take a moment to imagine yourself as the subject of the video, article, or post. Or imagine it is about someone you love: a friend, a sibling, a parent. What would it feel like to read that headline or see that comment? Would it still seem fair? Would it still feel true?

Whether it’s a post that confirms something you already believe, or one that makes the ‘other side’ look awful, we are all human. And that means we owe each other kindness, compassion, and respect, especially when we disagree. Leading with empathy is not weakness. It is strength. It’s not perfect and it’s slower than outrage. But it is a way forward that doesn’t leave us worse off.

Before You Move On…

What is one post you reshared recently that you wish you had looked into more deeply? What did it teach you?

What we share trains the world, and ourselves, on what matters.